Running BERT without Padding · bytedance/effective_transformer · GitHub

BERT Tokenization problem when the input string has a . in the string, like floating number · Issue #4420 · huggingface/transformers · GitHub

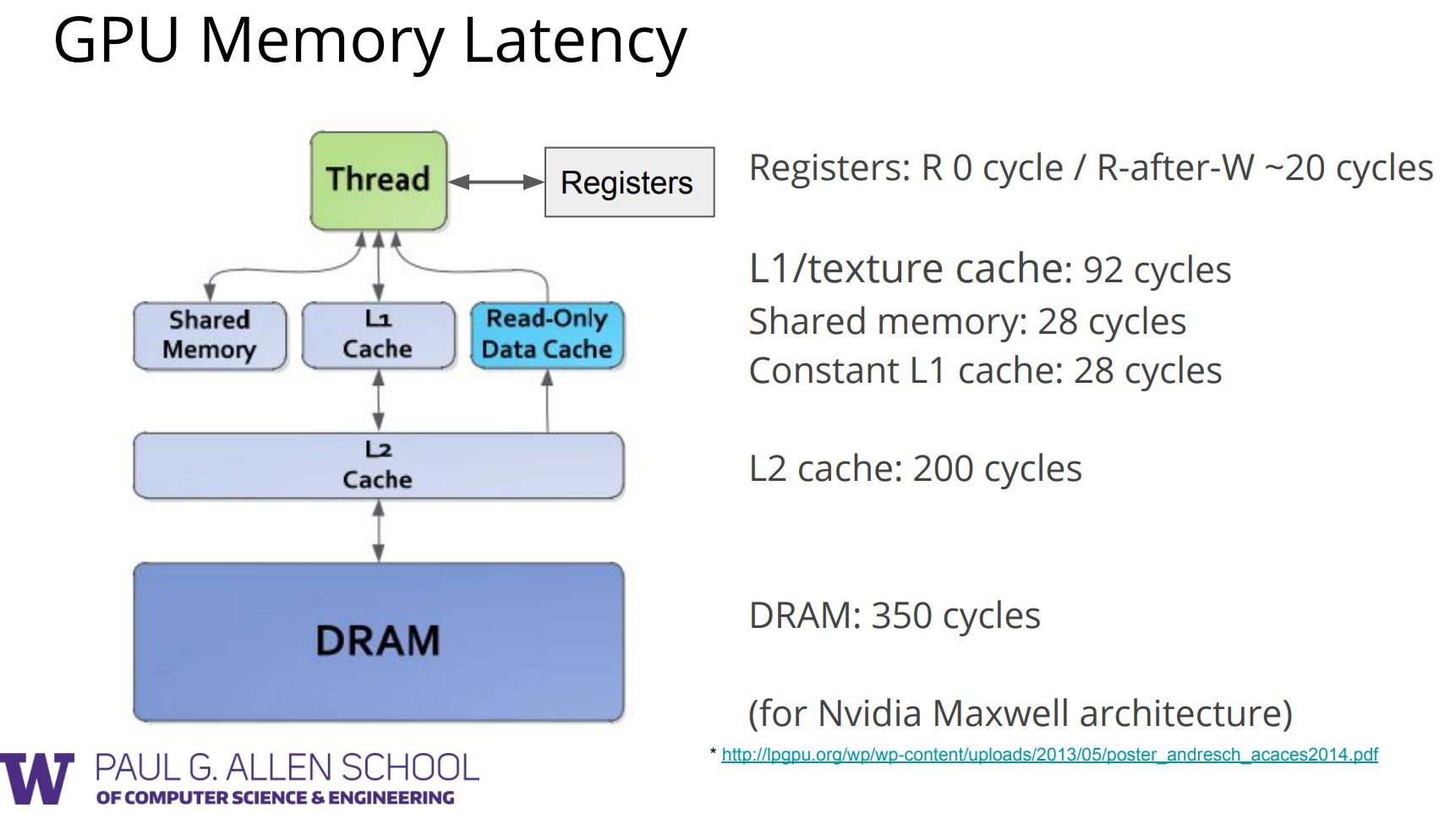

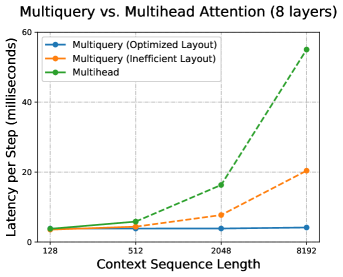

CS-Notes/Notes/Output/nvidia.md at master · huangrt01/CS-Notes · GitHub

(PDF) Packing: Towards 2x NLP BERT Acceleration

process stuck at LineByLineTextDataset. training not starting · Issue #5944 · huggingface/transformers · GitHub

What to do about this warning message: Some weights of the model checkpoint at bert-base-uncased were not used when initializing BertForSequenceClassification · Issue #5421 · huggingface/transformers · GitHub

Aman's AI Journal • Papers List

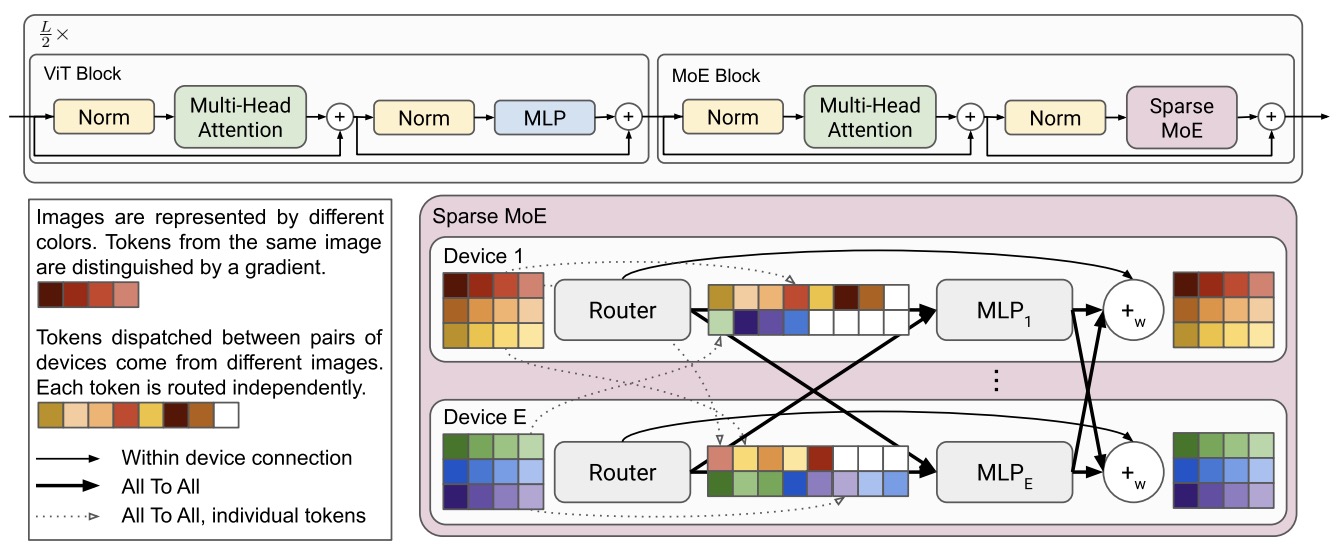

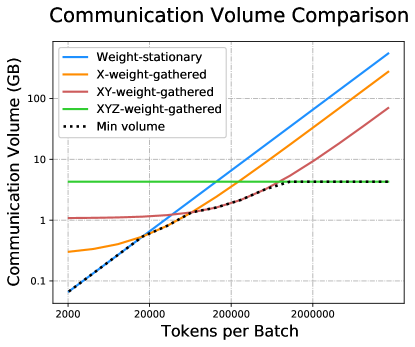

[2211.05102] 1 Introduction

Use Bert model without pretrained weights · Issue #11047 · huggingface/transformers · GitHub